We will primarily be talking about agents in the framework of moving particles. Because agents are so general, almost anything can be described as a multi-agent system. As such the study of multi-agent systems is most relavent in fields where precise descriptions are difficult. Agents are a common approach to modeling problems in behavioral economics and ecology. These domains involve the interaction of a large number of individuals who may having varying roles or properties as well as complex environmental conditions. These problems are difficult or impossible to describe in a precise mathematical formulation. Agents are also often used in computer graphics to allow the animation of crowds without directly controling each character.

The idea of the model is that each agent wants to be a certain distance from its neighbors and it also wants to go in the same direction as its neighbors. We define a set of rules or behaviors that govern each agent so that it will work towards that condition. In the simplest boid implementation there are three rules:

separation

alignment

cohesion

Cohesion. The desire to be in a group or attract to other agents. We model this as moving toward the centriod of the local neighborhood. We could think of this as a force towards average of the neighborhood.

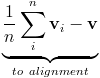

Alignment. Each agent wants to fly in the same direction as its neighbors. We want the velocity to tend to be the same between neighboring agents.

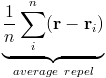

Separation. Agents don't like to be too close to each other. If agents are within a certain distance of each other, they repel.

The other important component is the neighborhood. In the simplest case, every agent within a certain radius is a neighbor. However, in reality many agents cannot see behind them. So we can also define the neighborhood as with a distance and an angle. Every agent within the area swept out by a line going from straightword (determined by velocity) and rotate by some angle is in the neighborhood. This can easily be determined by measuring the angle between the velocity vector and the vector from the current agent to a potential neighbor.

We may even want different rules to have different neighborhoods. An agent is only interested in avoiding agents that are very close and only interested in follow agents that are in front of them.

In order to quickly find the neighborhood, we need a fast way to find agents within a certain radius. We can use a simple binning data structure, as we have used in the past. There are also more complex collision detection algorithms which can account for unbounded spaces and variable radii.

We can think about these different behaviors as forces, where cohesion would mean the agent is attracted to the centroid of the neighborhood, or we can think of them in terms of "steering". This means we think of our agent like a vehicle, which if it wants to go towards something it turns or steers towards it. So primarily what we are changing is direction, not magnitude. If we are already going towards the center, we do not need to accelerate towards it. This entails taking the desired velocity, subtracting the current velocity, and using that as your acceleration.

Largely because 'multiagent simulation' is such an [over]broad term, there are lots of exntesions and refinements that people explore.

One way to think about how the field is organized is by looking at which piece of the generic multiagent model you're tweaking:

- agents :: Do they change over time? Can they talk to one another? Do they all follow the same rules at the same time? Are their rules spatially dependent?

- environment :: Does the environment change over time? Is it affected by different agents differently? Or does it only affect agents? What sort of information does it give to the agents?

- what sorts/species of agents are there

- the messages agents pass

- the way agents' behavior can change, and

- what determines to whom the agents can pass messages

- lifespan (meaning you'd want to have some way of keeping track of how long each agent was alive)

- changing speed with age (meaning each agent would need some parameter that individually controlled their speed)

- flocking behavior within bunny families (meaning each agent would need some way of recognizing and locating their family members—perhaps agents can ask for a 'name' from everyone they see)

- reproduction (meaning every time a boy bunny meets a girl bunny, there's some probability another bunny pops up)

- pursuit and evasion (meaning bunnies and wolves would not only need to be able to find nearby bunnies and wolves, but figure out in what direction their neighbors were moving)

For a reasonably clear overview of the strategies and ideas that the multiagent community has developed, check out Shoham's Multiagent System textbook for a pretty comprehensive survey of the issues we'll touch on here.

agent-agent interactions

In exploring how to modify and extend agent-agent interactions, it can be helpful to think about what the agents are doing in terms of message-passing. From this angle, there are four dimensions of agent-agent behavior you can think about:The first dimension is the most obvious: can you have more than one type of agent? Plenty of multiagent systems (e.g. flocking) stick with a single species and still create interesting, large scale behavior. There are a lot of systems which call for different groups or species of agents: simulation of predator and prey interactions on a scale, exploration of voting patterns and behavior, and modeling of urban environments involving a mix of different vehicles and pedestrians (sometimes getting as finely grained as accounting for children and elderly people's different modes of motion).

With the species of agent in mind, it's worth asking what sort of messages agents can pass around. In examples like flocking, agents communicate with one another simply by being near to one another. You can start to extend this by making it possible for agents to look at other characteristics of their neighbors, like speed, acceleration, and direction, and having the agents respond in some way.

What way? The range of possible agent behaviors is another dimension along which people extend and play with multiagent systems. What can your agents do? Do they leave a trail? Do they just move around? Can they get more massive? Change shape? Do they reproduce?

The last piece of local interactions that you want to keep in mind as you look at how people are modeling multiagent systems is the agent's "neighborhood." These dimensions all get subtler as your agents get more sophisticated—for an introduction to some of the tactics used in simulating people and crowds in the multiagent paradigm, take a look at "Scalable behaviors for crowd simulation".

a high level example

Ecology is one field that has found a lot of applications for multiagent simulation. People have done everything from reproduce pack hunting behaviors to simulate the population dynamics of predator and prey in a complex food web.Let's take a look at the predator-prey question. In the wild, you have bunches of different species of plants and animals. Everyone wants to eat someone, and most everyone wants to avoid being eaten by someone else. Starting in the simplest case, a predator-prey multiagent system is a 2-agent system seeded in a big, flat plane, randomly. At a first pass, you might make the bunnies and wolves move around randomly, and whenever they ran into each other, the bunnies would die. The bunnies and wolves could start out by moving randomly, or by diffusing, or you could introduce pursuit and evasion behavior, and eventually add things like reproduction and natural—well, not wolf-induced—death. Going beyond this, there are—as is often the case with multiagent simulations—an enormous number of refinements you can make. The goal to keep in mind is the behavior you're after. An accurate model is different than an effective one. Some possible extensions include:

agent-environment interactions

A lot of the questions people ask with multiagent systems move beyond just agents talking to one another (as in flocking) and start to put their agents in environments that change in space and time. In our predator-prey example, that might mean anything animals' top speeds changes from area to area. Or, it could mean that it becomes less likely for a wolf to notice a bunny if the bunny is in a specific area (that we might be imagining as "underbrush"). But, environments can do more than just affect the behavior of agents— can affect environments, as well.In the ecological domain, lots of multiagent simulations have agents affect the environment by consuming resources or altering landscape to start to get at ideas of "carrying capacity." To return to our predator and prey example, your wolves and bunnies could be moving on a grid where each square contains an amount of some resource (e.g. grass) that is consumed by the bunnies as they travel through it and is replenished at some rate. Seeding the initial stage with different distributions of grass will create different long term behavior in terms of where the bunnies aggregate and grow.

Another common extension of agent-environment interactions is for agents to leave trails (which may decay with time) that either influence other agents (as in the case of ants that follow one another's pheromones) or alter the environment. Implementing this starts with some way for agents to record where they have been—this might mean changing the color of a pixel or storing more complicated information in a matrix that other agents refer to as they travel from point to point. To get some sense for how people implement these extensions—and what sorts of behaviors they can create—check out Hawick's "Complex Emergent Behaviour from Spatial Animat Agents" and try to ignore the ridiculously liberal sprinkling of buzzwords.

extending and evolving agents and environment

There are lots of ways that people extend the capability of agents in multiagent simulations. People throw in evolution of specific traits, they create languages that the agents can use to pass more and more sophisticated messages, and they do things like give agents memory (so in the predator prey example, people have created systems where individual predator and prey evolve different strategies for pursuit and evasion, and the wolves and bunnies can essentially do things like "rememember" that a particular bunny is likely to dodge right if you run straight in, etc.) We're going to focus on techniques for evolving agents, but if you're interested in the AI/learning side of multiagent learning, check out the review articles, "Cooperative Multi-Agent Learning: The State of the Art" (which covers a handful of ways in which agents evolve and are extended in multiagent simulations).One of the most general and useful techniques for evolving agents is genetic algorithms. The overall idea of genetic algorithms is inspired by evolution. In nature, new genomes appear either through mutation or through two individuals mating, and this creates a unique individual that tries to make its way in the world, surviving long enough to reproduce.

Genetic algorithms generalize this process. The basic idea is that you encode everything which interests you about an agent into a "genome." You then let your agent make its way in your virtual world, and based on some definition of "fitness" or "quality" or "utility" you select some of your population, take their genomes, and mix them up a bit, creating a new generation. Rinsing and repeating, over time your agents "evolve."

This description waves off a lot of the details, so lets look a little closer at a specific example that returns to our predator-prey model.

a high level example

This example is drawn from Theo Jansen's work, a Dutch artist and kinetic sculptor famous for his mechanical beach beasts made from plastic tubing.One of his earliest explorations in creature creations was entirely digital. His agents were small animals shaped like lines that flew around, bounced off walls, and killed each other when they ran into another one. So even simpler than a two-agent, predator-prey system, it was a one-agent system where everyone is a predator and a prey.

But looking at the digital representation of these animals, they were broken up into four segments, each of which could be straight, curved clockwise, or curved counterclockwise. Each generation, surviving animals would get copied, but there was some probability of a mutation. If an animal started out straight, there was some likelihood that its child would have a kink.

Over time, he found that the population evolved toward being totally curled up: minimizing their cross sectional area, and thus minimizing the probability that they'd be stabbed by another animal.

You're already familiar with what it takes to make the animals move around, and to detect when they run into each other (and thus die). But how to achieve the evolutionary piece?

The first step is to figure out how to encode the properties of the creature. In this case, one way to do it would be to choose a number to represent a straight, clockwise, and counterclockwise segment. In this case, the "genome" for each animal could simply be four numbers. So, lets say we chose -1 to be counterclockwise curved, 0 straight, and 1 clockwise curved. 0000 would be a straight animal, 1111 a totally curved one, and 010-1 some mix.

Each generation, we could make copies of say one out of every ten of the animals, and maybe one out of every ten of those would not get copied exactly, but would get one of their segments assigned randomly.

A few minutes later, after they bounce around for a while, killing each other off, the cycle repeats.

For some images from Jansen's original work, check out this excerpt from his book, The Great Pretender. For more in-depth explanations of the basic process behind creating genetic algorithms, check out this tutorial.

- Try creating a multiagent simulation with more than one type of agent. Have those agents interact with trails left by other agents.

- Try implementing and extending Jansen's simulation to allow the animals to grow in each generation.

- Take a look at NetLogo—a multi-agent programmable modeling environment—for some inspiration of systems to model, as well as examples of how people implement a lot of these strategies. Note that NetLogo has its own language, but it's pretty high level, and this tutorial should get you started.

Craig Reynolds' Page on Boids

Daniel Shiffman's Tutorial on Steering Behaviors

"Complex Emergent Behaviour from Spatial Animat Agents"

"Cooperative Multi-Agent Learning: The State of the Art"

"Scalable behaviors for crowd simulation"

Multiagent Systems - Algorithmic, Game Theoretic, and Logical Foundations

"Theo Jansen - The Art of Creating Creatures (TED Talk)"